AI Security: Web Flaws Resurface in Rush to Use MCP Servers

In the rush to implement AI tools and services, developers are rapidly embracing the Model Context Protocol (MCP). In the process, classic vulnerabilities are resurfacing and new ones are being introduced. In this blog, we outline key areas of concern and how Tenable Web App Scanning can help.

The Model Context Protocol (MCP) is an open standard introduced by Anthropic in late 2024 and quickly adopted by OpenAI, Google and Microsoft. It allows AI assistants to connect with external data sources and tools and improve their capabilities.

The MCP ecosystem has exploded in recent months as developers rush to meet business demand and integrate this powerful new standard into their applications and AI-based workflows to easily provide efficient cross-product integrations. In the process, fundamental development mistakes are being repeated.

Meanwhile, the rapid adoption of AI across organizations has security teams struggling to understand the threat implications as they learn how to secure AI for their business teams.

Want to learn more about MCP-related risks? Read the blog “How Tenable Research Discovered a Critical Remote Code Execution Vulnerability in Anthropic MCP Inspector.”

While new vulnerability classes arise from the use of LLMs and other AI-based technologies, MCP server developers often appear to focus more on the LLM integration than on the underlying API. This brings back classic web vulnerabilities that can be devastating.

This blog delves into key security concerns for MCP servers and how Tenable Web App Scanning can help security teams detect such vulnerabilities.

Introduction to MCP

MCP was released by Anthropic at the end of November 2024, providing developers with a full development kit for both clients and servers to build secure connections between AI-powered tools and various data sources available in an organization or hosted by a third-party provider.

Transport modes

As described in a previous blog post, the protocol is built on a few key components. In this blog, we focus on the MCP server component.

MCP servers can be exposed to clients with two main transport modes:

- Standard Input/Output (STDIO): this deployment allows local-only communication between the client and the server.

- HTTP: as opposed to STDIO, the HTTP transport is used for remote server deployments. Two service types are currently available in the server software development kit (SDK):

  - Server-Sent Events (SSE): Described in the HTML5 specification, and more specifically in the EventSource API, this protocol allows servers to push data to their clients once an initial connection has been established. Early server implementations may rely on SSE only, but this transport, defined in protocol version 2024-11-05, has been deprecated since protocol version 2025-03-26 in favor of Streamable HTTP.

  - Streamable HTTP: Uses classic client-to-server HTTP communication, which can also embed SSE streams depending on the server implementation.

Messages

Once the transport mode is defined, MCP relies on three types of JSON-RPC-based messages exchanged between the MCP client and the server: requests, responses and notifications.

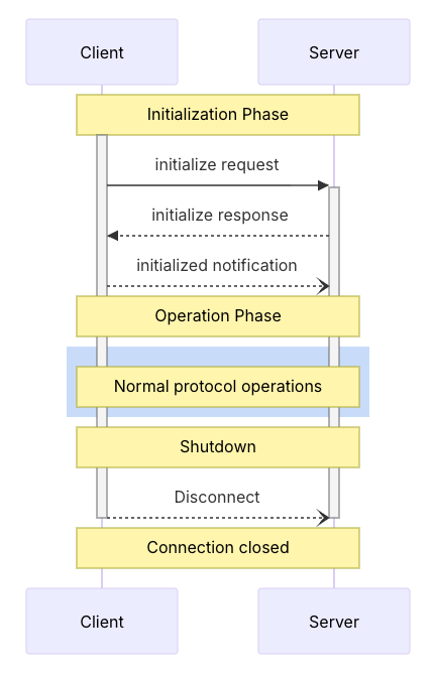

We won’t cover every message format, as they are all defined in the protocol specification. The standard flow is straightforward and relies on:

- An initialization phase during which both the client and the server will exchange protocol compatibility and capabilities

- An operation phase during which the client will interact with the server to request resources offered by the server

- A closing phase where the client will disconnect from the server
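The three phases above can be sketched with plain JSON-RPC messages. This is a minimal illustration, not a full client: the helper names and the `clientInfo` values are our own assumptions, and the protocol version string is only an example. No message is built for the closing phase here, since the client simply ends the transport connection.

```python
import json


def make_request(msg_id: int, method: str, params: dict) -> dict:
    """A JSON-RPC request carries an id so the response can be matched to it."""
    return {"jsonrpc": "2.0", "id": msg_id, "method": method, "params": params}


def make_notification(method: str) -> dict:
    """A JSON-RPC notification has no id, and no response is expected."""
    return {"jsonrpc": "2.0", "method": method}


# 1. Initialization: the client announces its protocol version and capabilities.
initialize = make_request(1, "initialize", {
    "protocolVersion": "2025-03-26",  # example version string
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # illustrative
})

# Once the server has replied with its own version and capabilities,
# the client confirms that it is ready.
initialized = make_notification("notifications/initialized")

# 2. Operation: regular requests, such as listing the tools.
list_tools = make_request(2, "tools/list", {})

for message in (initialize, initialized, list_tools):
    print(json.dumps(message))
```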

For example, if a client wants to know the list of tools exposed by the server and call one of them, it will use this message after initialization:

    {
      "jsonrpc": "2.0",
      "method": "tools/list",
      "params": {},
      "id": 1
    }

The server will respond with a list of the tools:

    {
      "jsonrpc": "2.0",
      "id": 1,
      "result": {
        "tools": [
          {
            "name": "fetch_url",
            "description": "Fetch content from a URL",
            "inputSchema": {
              "properties": {
                "url": {
                  "title": "url",
                  "type": "string"
                }
              },
              "required": ["url"],
              "type": "object"
            }
          }
        ]
      }
    }

It is then possible to call a specific tool, just as an LLM would through an MCP client:

    {
      "jsonrpc": "2.0",
      "method": "tools/call",
      "params": {
        "name": "TOOL_NAME",
        "arguments": {
          "TOOL_ARG_1": "ARG_1_VALUE"
        }
      },
      "id": 1
    }

Similarly, a client can list the resources exposed by an MCP server:

    {
      "jsonrpc": "2.0",
      "method": "resources/list",
      "params": {},
      "id": 1
    }

The server will then return all the resources available:

    {
      "jsonrpc": "2.0",
      "id": 1,
      "result": {
        "resources": [
          {
            "uri": "config://secret",
            "name": "config://secret",
            "mimeType": "text/plain"
          }
        ]
      }
    }
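Over the Streamable HTTP transport, any of the requests above travels as the body of an HTTP POST. The sketch below only builds such a request with the Python standard library. The endpoint URL is a hypothetical placeholder, the helper name is ours, and session handling and the initialization exchange are left out.

```python
import json
import urllib.request

# Hypothetical endpoint: real servers choose their own host, port and path.
MCP_ENDPOINT = "http://localhost:8000/mcp"


def build_mcp_post(endpoint: str, message: dict) -> urllib.request.Request:
    """Wrap one JSON-RPC message in an HTTP POST for a Streamable HTTP server."""
    body = json.dumps(message).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # The server may answer with plain JSON or with an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
    )


request = build_mcp_post(
    MCP_ENDPOINT,
    {"jsonrpc": "2.0", "method": "tools/list", "params": {}, "id": 1},
)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```

The point for the rest of this blog is that nothing in this request requires an LLM: any HTTP client that can reach the endpoint can send it.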

The AI adoption race meets MCP server web flaws

The MCP promise is to enable organizations to quickly expand AI-based workflow capabilities by interconnecting AI assistants and agents with a large range of tools and resources. We previously discussed the HTTP transport mode and the ability to communicate with MCP servers like any other API.

In recent months, we have seen an exponential increase in the availability of MCP servers, as shown by the rise of MCP server marketplaces like https://mcpservers.org/ or https://mcpmarket.com/, and the popularity of some projects such as https://github.com/punkpeye/awesome-mcp-servers (which had received more than 56,000 GitHub stars at the time this blog post was written).

Unfortunately, the rapid delivery of new and interesting features and use cases for a variety of tools and software can come at a high cost when security basics are overlooked. Below, we highlight three key areas of cyber risk.

1. Authentication and authorization

The March 26, 2025, MCP specification update (protocol version 2025-03-26) introduced support for OAuth 2.1 to enforce resource access control at the transport level. MCP clients can now use OAuth flows to obtain access tokens and consume resources exposed by the MCP server. Note that the authorization server used in this OAuth workflow still has to authenticate users, as the MCP server does not natively handle this.

Even when MCP servers are meant to serve an organization's internal needs only, both authentication and authorization must be enforced. Developers should restrict access to the tools by implementing these mechanisms and ensuring they are robust.
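As a rough illustration of enforcing both checks on every request, the sketch below separates authentication (is the bearer token valid?) from authorization (does it grant the required scope?). It is not the MCP SDK's API: the scope name, the function names and the toy token store are assumptions, and `lookup_scopes` stands in for real token validation against the authorization server.

```python
from typing import Callable, Mapping, Optional, Set

# Hypothetical scope name; real deployments define their own.
REQUIRED_SCOPE = "tools:call"


def extract_bearer_token(headers: Mapping[str, str]) -> Optional[str]:
    """Return the token from an 'Authorization: Bearer <token>' header, if present."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    token = token.strip()
    if scheme.lower() != "bearer" or not token:
        return None
    return token


def check_access(
    headers: Mapping[str, str],
    lookup_scopes: Callable[[str], Optional[Set[str]]],
) -> int:
    """Return the HTTP status an MCP endpoint could answer with.

    `lookup_scopes` returns the scopes granted to a valid token,
    or None if the token is invalid or expired.
    """
    token = extract_bearer_token(headers)
    if token is None:
        return 401  # authentication missing
    scopes = lookup_scopes(token)
    if scopes is None:
        return 401  # authentication failed
    if REQUIRED_SCOPE not in scopes:
        return 403  # authenticated, but not authorized for this action
    return 200


# Toy token store used only to exercise the sketch.
FAKE_TOKENS = {"valid-token": {"tools:call"}, "read-only-token": {"resources:read"}}

print(check_access({}, FAKE_TOKENS.get))
print(check_access({"Authorization": "Bearer read-only-token"}, FAKE_TOKENS.get))
print(check_access({"Authorization": "Bearer valid-token"}, FAKE_TOKENS.get))
```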

A common mistake is to assume that a server “only” available on internal infrastructure cannot be reached by a remote, unauthenticated user. However, HTTP-based MCP servers can suffer from the same issues as other web and API applications, especially in a cross-origin context:

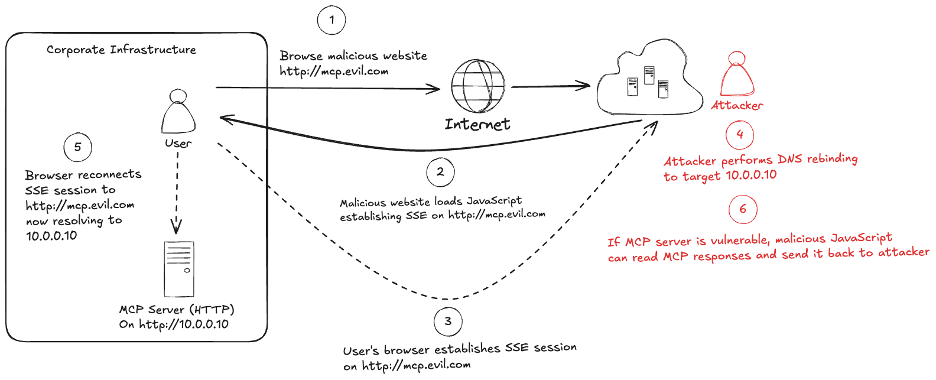

The above diagram illustrates how an attacker can exploit the lack of control in this situation:

- A legitimate user visits a malicious website.

- The website loads malicious JavaScript in the legitimate user’s browser.

- This code uses the JavaScript EventSource API to establish an SSE session with http://mcp.evil.com (for example).

- The attacker performs a DNS rebinding attack by updating the mcp.evil.com DNS record to point to a local server (here, 10.0.0.10).

- The user’s browser reconnects the SSE session, still to mcp.evil.com, which now resolves to the new IP address.

- Once the SSE session is re-established, the Same-Origin Policy (SOP) is bypassed: the browser considers that the JavaScript loaded from http://mcp.evil.com and the responses from the target MCP server both come from the same origin, http://mcp.evil.com.
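In the scenario above, the rebound request still carries the attacker's hostname in its `Host` header, which gives the server a way to reject it. The following sketch shows one common mitigation, checking `Host` and `Origin` against an allowlist. The allowlist values (including port 8000 and the `mcp.internal.example` name) and the function name are assumptions for illustration, and this check complements authentication rather than replacing it.

```python
from typing import Mapping
from urllib.parse import urlsplit

# Hypothetical values: the names and ports under which the server is legitimately reached.
ALLOWED_HOSTS = {"10.0.0.10:8000", "mcp.internal.example:8000"}
ALLOWED_ORIGINS = {"http://mcp.internal.example:8000"}


def is_request_allowed(headers: Mapping[str, str]) -> bool:
    """Reject requests whose Host or Origin header is not on the allowlist."""
    host = headers.get("Host", "").lower()
    if host not in ALLOWED_HOSTS:
        # After DNS rebinding, the browser still sends "Host: mcp.evil.com".
        return False
    origin = headers.get("Origin")
    if origin is None:
        # Non-browser clients usually send no Origin header.
        return True
    parts = urlsplit(origin)
    normalized = f"{parts.scheme}://{parts.netloc}".lower()
    return normalized in ALLOWED_ORIGINS


print(is_request_allowed({"Host": "mcp.evil.com"}))
print(is_request_allowed({"Host": "10.0.0.10:8000"}))
```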

Another example of the impact of missing authentication is Tenable’s recent vulnerability discovery in the MCP Inspector tool. Although this tool is not an MCP server, it is part of the MCP ecosystem and shows how such tools, provided as open source by a major LLM provider, can put organizations and their users at risk.

2. Tool vulnerabilities

A tool within the MCP context is simply a function that can be called through JSON-RPC messages with a specific list of typed arguments. Judging from the numerous implementations we observed, MCP server developers tend to forget that an attacker can call tools directly with “low-level” API requests, bypassing the LLM, and exploit any vulnerability they contain.

Let’s take a very simple example with this tool:

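    import requests

    # `mcp` is assumed to be an MCP server instance created elsewhere,
    # for example with the FastMCP helper of the MCP Python SDK.

    @mcp.tool()
    def fetch_url(url: str) -> str:
        """Fetch content from a URL."""
        try:
            # Deliberately vulnerable: no URL validation, TLS verification disabled.
            response = requests.get(url, verify=False)
            response.raise_for_status()
            return response.text
        except requests.RequestException as e:
            return f"Error fetching URL: {e}"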

By sending the following JSON-RPC message, we can fetch a URL and get the response:

    {
      "jsonrpc": "2.0",
      "method": "tools/call",
      "params": {
        "name": "fetch_url",
        "arguments": {
          "url": "https://www.tenable.com"
        }
      },
      "id": 1
    }

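A common assumption seems to be that the LLM, through the MCP client, will only use the tools as intended, which hides the need to sanitize and validate inputs. Yet anyone who can reach a remote MCP server can send this JSON-RPC message directly with an arbitrary URL, such as an internal address. In this case, the server is vulnerable to “full-read” Server-Side Request Forgery (SSRF) attacks: the attacker chooses the request target and reads the full response.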

The SSRF case is a single example, but it extends easily to almost every class of web vulnerability (remote code execution, SQL injection, etc.). Independent research shows that numerous exposed services are vulnerable to various attacks, such as code execution and path traversal.
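Returning to the `fetch_url` tool, one way to reduce the SSRF risk is to validate the URL before fetching it. The sketch below is one possible approach under stated assumptions, not a complete defense: the function names are ours, only `http` and `https` are allowed, and a URL is rejected if its hostname resolves to any address that is not globally routable. The `resolver` parameter exists so the check can be exercised without real DNS lookups.

```python
import ipaddress
import socket
from typing import Callable, List
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}


def resolve_host(hostname: str) -> List[str]:
    """Resolve a hostname to the list of IP addresses it points to."""
    infos = socket.getaddrinfo(hostname, None)
    return sorted({info[4][0] for info in infos})


def is_public_address(address: str) -> bool:
    """True only for globally routable addresses (not loopback, private, link-local)."""
    try:
        return ipaddress.ip_address(address).is_global
    except ValueError:
        return False


def is_url_allowed(url: str, resolver: Callable[[str], List[str]] = resolve_host) -> bool:
    """Allow http(s) URLs whose host resolves exclusively to public addresses."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES or not parts.hostname:
        return False
    try:
        addresses = resolver(parts.hostname)
    except OSError:
        return False  # resolution failed
    return bool(addresses) and all(is_public_address(a) for a in addresses)
```

A tool would call `is_url_allowed(url)` and return an error before issuing the request. Note the limits of this sketch: the hostname can resolve differently between the check and the actual fetch, and HTTP redirects can lead to internal addresses, so a real implementation would also need to pin the resolved address and disable or re-validate redirects.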

3. Sensitive information exposure

To avoid complex flows and infrastructure, developers sometimes hardcode sensitive information or otherwise make it available in various ways. This includes, but is not limited to, secrets such as credentials and business-sensitive information.

As with any function, tools can either embed sensitive information directly or call third-party services that expose it. For example:

- MCP server implementations that handle machine-to-machine authentication with a third party but do not handle errors properly. Fuzzing the tool may trigger errors or exceptions that are returned to the caller and potentially expose secrets (authentication headers, for example).

- Third-party services that wrongly assume the calling MCP server is trustworthy and provide sensitive information by default. For example, a third-party API that does not properly enforce authentication or authorization could expose personally identifiable information (PII) or confidential business information.
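The first case can be illustrated with a small wrapper that keeps exception details on the server and returns only a generic message to the caller. Everything here is hypothetical: `UpstreamError`, `call_billing_api` and `safe_tool_call` are stand-ins we made up to simulate a third-party client whose error message embeds an authentication header.

```python
import logging
import uuid
from typing import Callable

logger = logging.getLogger("mcp_server")


class UpstreamError(Exception):
    """Hypothetical error raised by a third-party client; may embed secrets."""


def call_billing_api(customer_id: str) -> str:
    """Stand-in for a third-party call that fails and leaks its request headers."""
    raise UpstreamError(
        f"401 for customer {customer_id}; sent Authorization: Bearer s3cr3t-token"
    )


def safe_tool_call(func: Callable[..., str], *args: str) -> str:
    """Run a tool body and never return raw exception text to the MCP client."""
    try:
        return func(*args)
    except Exception:
        incident_id = uuid.uuid4().hex[:8]
        # Full details stay in server-side logs, which must be access-controlled too.
        logger.exception("Tool call failed (incident %s)", incident_id)
        return f"The upstream service returned an error (incident {incident_id})."


result = safe_tool_call(call_billing_api, "42")
print(result)
```

The incident identifier lets an operator correlate the client-visible message with the server-side log entry without exposing the underlying error.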

Among the context primitives available in MCP servers, resources are designed to expose data that clients can read and use as context for LLM operations. A tempting shortcut is to feed the LLM, through its MCP client, with data that it could reuse, for example, to authenticate to another service.

Given the different ways to expose these resources, such as static files or databases, this should be a point of concern when developing MCP servers, and secrets management best practices still apply in this context.

The following code snippet declares a resource that exposes a hardcoded API key:

    # Deliberately insecure: the secret is hardcoded and readable by any client.
    @mcp.resource("data://api_key")
    def get_api_key() -> str:
        """Internal Service API Key"""
        return "API_KEY=internalAPIkey1234"
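By contrast, a safer pattern keeps the secret on the server side and never returns it to the client. The sketch below is an assumption-laden illustration: the environment variable name, the function names and the `Bearer` scheme are ours, and a production setup would more likely rely on a dedicated secrets manager.

```python
import os
from typing import Dict, Mapping


def load_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Read the key from the environment instead of hardcoding it in the source."""
    key = env.get("INTERNAL_SERVICE_API_KEY")  # hypothetical variable name
    if not key:
        raise RuntimeError("INTERNAL_SERVICE_API_KEY is not set")
    return key


def build_auth_headers(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Headers the server attaches itself when a tool calls the internal service."""
    return {"Authorization": f"Bearer {load_api_key(env)}"}


def describe_internal_service() -> str:
    """What a resource could expose instead: non-sensitive metadata only."""
    return "Internal service: authentication is handled server-side."
```

With this split, the LLM and its MCP client only ever see the description, while the credential is used inside the tool implementation.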

Empowering Tenable Web App Scanning for MCP servers

Tenable Web App Scanning is designed to detect complex vulnerabilities across modern web applications and APIs. As AI-driven architectures rapidly evolve, we are expanding our scanning capabilities to cover emerging protocols that power the next generation of AI-native infrastructure.

MCP servers can rely on the HTTP protocol, which inherently expands the attack surface. Protecting MCP servers requires adhering to well-established security paradigms and in-depth vulnerability detection to stay ahead of modern and sophisticated threats.

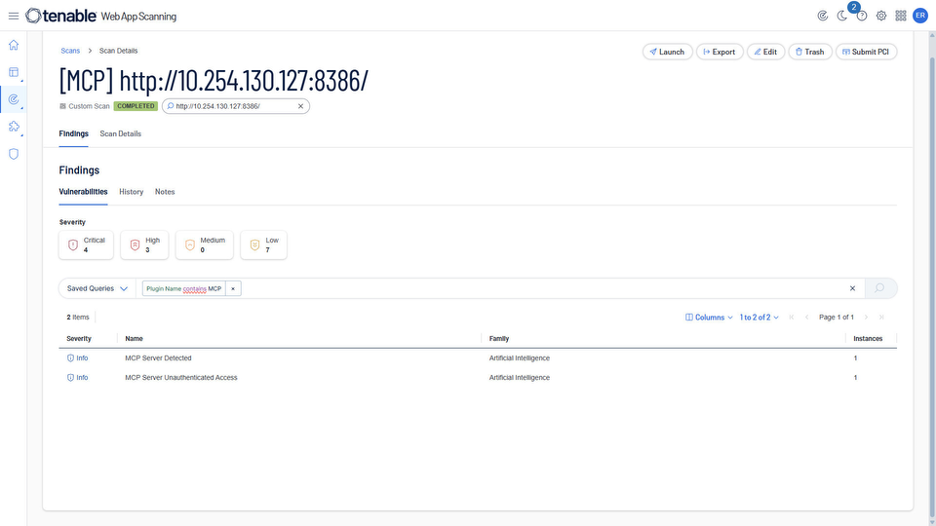

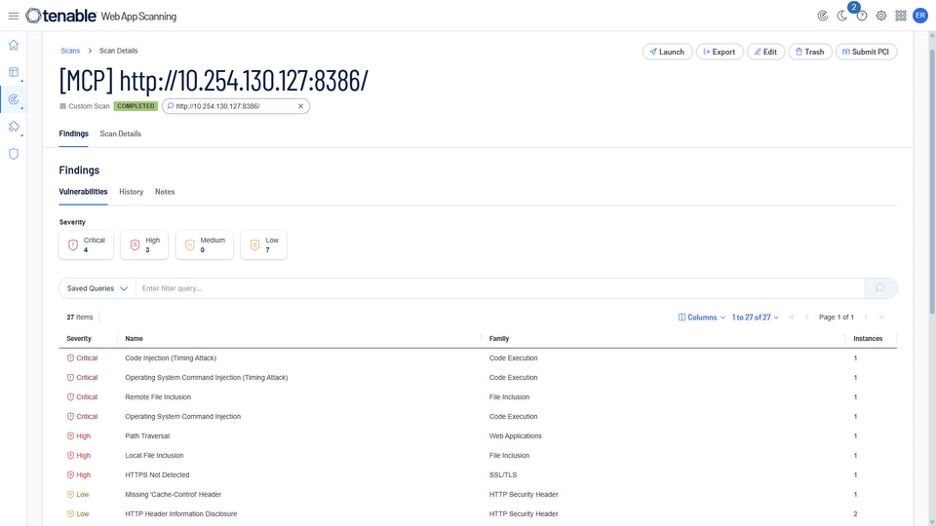

New MCP-specific plugins recently added to Tenable Web App Scanning can help organizations identify such servers in their infrastructure and discover any associated vulnerabilities. MCP servers are being built very quickly by many vendors, but they can also be deployed just as quickly and easily by an organization’s AI developers, becoming part of the “shadow IT” blind spot that security teams have to contend with.

When MCP assets are detected, Tenable Web App Scanning plugins can now analyze the target MCP server implementation for API-related vulnerabilities. This covers a broad range of vulnerabilities, such as code execution and SQL injection, which are tested against all detected tools of the target MCP server.

Conclusion

The massive adoption of MCP demonstrates the level of interest and focus organizations are placing on AI technologies as they look to provide tools to enhance workflow and improve productivity.

While MCP plays an important role in AI software ecosystems, bringing organizations new opportunities to use their data and enhance their business workflows and intelligence, it should be implemented carefully. Developers need to follow security best practices and safeguard against traditional threats as well as the specific vulnerabilities emerging from AI technologies.

Continuously monitoring and assessing MCP server security, and updating as needed, is essential to avoid putting organizations at risk in the rush to adopt AI.

Learn more

- Tenable Web App Scanning

- How Tenable Research Discovered a Critical Remote Code Execution Vulnerability in Anthropic MCP Inspector

- MCP Prompt Injection: Not Just For Evil

- Frequently Asked Questions About Model Context Protocol (MCP) and Integrating with AI For Agentic Applications

- DNS Rebinding Framework by NCCGroup
