Cybersecurity Snapshot: Refresh Your Akira Defenses Now, CISA Says, as OWASP Revamps Its App Sec Top 10 Risks

Learn why you should revise your Akira ransomware safeguards and see what’s new in OWASP’s revamped Top 10 Web Application Risks. We also cover agentic AI’s cognitive degradation risk, hackers' abuse of Anthropic's agentic AI, the latest AI security trends, and new data on CISO compensation.

Key takeaways

- CISA and other agencies are urging organizations, especially in critical infrastructure, to immediately update defenses against the evolving Akira ransomware, which now has a faster variant.

- OWASP has revamped its ranking of top app sec risks for 2025, with "broken access control" remaining the top threat and new categories like "software supply chain failures" added.

- Researchers have identified a new threat for autonomous AI called "cognitive degradation," a progressive failure of reasoning and memory, and have proposed a framework to mitigate it.

Here are six things you need to know for the week ending November 14.

1 - Sound the alarm: Akira ransomware is an "imminent threat"

Cyber teams, especially those in critical infrastructure organizations, need to update their protection playbook against Akira ransomware attackers, who continue to evolve their tactics and to threaten sectors including manufacturing, education, IT, healthcare, financial, and food and agriculture.

The urgent alert comes from law enforcement and cybersecurity agencies in multiple countries, including France, Germany, the Netherlands and the U.S.

The agencies this week released an updated advisory on Akira with the latest indicators of compromise (IOCs), tactics, techniques, and procedures (TTPs), and detection methods. The advisory, titled “#StopRansomware: Akira Ransomware,” was first published in April 2024.

“Akira ransomware threat actors, associated with groups such as Storm-1567, Howling Scorpius, Punk Spider, and Gold Sahara, have expanded their capabilities,” the U.S. Cybersecurity and Infrastructure Security Agency (CISA) said in a statement.

Right away, organizations should remediate known exploited vulnerabilities; adopt phishing-resistant multi-factor authentication (MFA); and regularly back up critical data offline.

For initial access, threat actors exploit vulnerabilities in edge devices and backup servers—such as authentication bypass and cross-site scripting—and use brute-force techniques to compromise credentials.

Once inside, attackers use command-line techniques for discovery. To evade defenses, they leverage remote management tools like AnyDesk and LogMeIn to mimic administrator activity, modify firewall settings, and disable or uninstall antivirus and endpoint detection and response (EDR) systems.

For privilege escalation, the actors deploy malware, exploit driver vulnerabilities, and steal administrator credentials. Lateral movement is achieved using remote access protocols like RDP and SSH. For command and control, the group uses tools such as Ngrok and the SystemBC remote access trojan.

The advisory also highlights a new variant, Akira_v2, which features faster encryption speeds and new measures to inhibit system recovery. Data exfiltration occurs via FTP, SFTP, and cloud services before encryption.
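As a rough illustration of how a team might start hunting for the tooling described above, the following Python sketch scans process-creation records for the remote management and command-and-control tools named in this article. The `ProcessEvent` record, the `flag_watched_tools` function, and the simple substring matching are all assumptions made for illustration; they are not IOCs or detection logic from the advisory, which should be consulted for the actual indicators.

```python
from dataclasses import dataclass

# Tool names taken from the article text; the matching logic and the
# event format are assumptions for illustration, not official IOCs.
WATCHED_TOOLS = ("anydesk", "logmein", "ngrok", "systembc")


@dataclass
class ProcessEvent:
    """Hypothetical, simplified process-creation record."""
    host: str
    user: str
    image: str  # path or name of the executable that started


def flag_watched_tools(events, approved_hosts=frozenset()):
    """Return (event, tool) pairs for watched tools seen on non-approved hosts."""
    hits = []
    for event in events:
        image = event.image.lower()
        for tool in WATCHED_TOOLS:
            if tool in image and event.host not in approved_hosts:
                hits.append((event, tool))
                break  # one hit per event is enough for triage
    return hits


if __name__ == "__main__":
    sample = [
        ProcessEvent("hr-laptop-07", "jdoe", r"C:\Users\jdoe\Downloads\AnyDesk.exe"),
        ProcessEvent("it-admin-01", "admin", r"C:\Program Files\AnyDesk\AnyDesk.exe"),
        ProcessEvent("db-server-02", "svc_backup", r"C:\Temp\ngrok.exe"),
        ProcessEvent("hr-laptop-07", "jdoe", r"C:\Windows\System32\notepad.exe"),
    ]
    for event, tool in flag_watched_tools(sample, approved_hosts={"it-admin-01"}):
        print(f"review: {tool} on {event.host} (user {event.user})")
```

Because attackers use these tools precisely to blend in with administrator activity, a name match alone proves little. The `approved_hosts` allowlist is one simple way to separate sanctioned use from activity worth a closer look.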

For more information about ransomware defense:

- “Mitigating malware and ransomware attacks” (U.K. National Cyber Security Centre)

- “#StopRansomware Guide” (CISA)

- “Steps to Help Prevent & Limit the Impact of Ransomware” (Center for Internet Security)

- “Ransomware: How to prevent and recover” (Canadian Centre for Cyber Security)

- “Stop ransomware attacks in their tracks” (Tenable)

2 - OWASP refreshes list of top web app sec risks

The Open Worldwide Application Security Project (OWASP) is revamping its ranking of the ten most critical web application risks, the first update to this popular list since 2021.

Topping the list again is “broken access control,” which now includes the 2021 list’s category “server-side request forgery (SSRF).” Meanwhile, “security misconfiguration” moved up to the second spot.

New on the list are “mishandling of exceptional conditions” and “software supply chain failures,” which is an expansion of the 2021 category “vulnerable and outdated components.”
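To make the "mishandling of exceptional conditions" category more concrete, here is a minimal Python sketch of one pattern commonly associated with it: an access check that "fails open" when an error occurs, next to a version that "fails closed." The function names, the `PolicyServiceError` exception, and the dictionary-based policy are hypothetical and chosen only for illustration; they are not taken from OWASP's documentation.

```python
class PolicyServiceError(Exception):
    """Raised when the (hypothetical) policy backend cannot answer."""


def lookup_permission(user, resource, policy):
    """Hypothetical policy lookup; raises if the backend is unavailable."""
    if policy is None:
        raise PolicyServiceError("policy backend unavailable")
    return resource in policy.get(user, set())


def is_allowed_fail_open(user, resource, policy):
    """Anti-pattern: an error during the check silently grants access."""
    try:
        return lookup_permission(user, resource, policy)
    except PolicyServiceError:
        return True  # fails open


def is_allowed_fail_closed(user, resource, policy):
    """Safer pattern: any error during the check results in denial."""
    try:
        return lookup_permission(user, resource, policy)
    except PolicyServiceError:
        return False  # fails closed; a real system would also log and alert
```

With a policy of `{"alice": {"/reports"}}`, both functions agree on normal requests. They differ only when the backend is unavailable (`policy=None`), where the fail-open version grants access to anyone.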

“Companies should adopt this document and start the process of ensuring that their web applications minimize these risks,” OWASP wrote.

“Using the OWASP Top 10 is perhaps the most effective first step towards changing the software development culture within your organization into one that produces more secure code,” it added.

Here’s the full list:

- A01:2025 - Broken Access Control

- A02:2025 - Security Misconfiguration

- A03:2025 - Software Supply Chain Failures

- A04:2025 - Cryptographic Failures

- A05:2025 - Injection

- A06:2025 - Insecure Design

- A07:2025 - Authentication Failures

- A08:2025 - Software or Data Integrity Failures

- A09:2025 - Logging & Alerting Failures

- A10:2025 - Mishandling of Exceptional Conditions

And this is how the 2021 and 2025 rankings compare.

(Source: OWASP, November 2025)

“We’ve worked to maintain our focus on the root cause over the symptoms as much as possible. With the complexity of software engineering and software security, it’s basically impossible to create ten categories without some level of overlap,” OWASP wrote.

OWASP also highlighted two web app risks that didn’t make the list but that it considers important: lack of application resilience and memory management failures.

Although the new list of web app risks is final, the eighth installment of this popular guide is a release candidate (RC1) at this point, meaning OWASP is accepting comments and feedback on it until November 20.

For more information about application security:

- “Securing Web Application Technologies (SWAT) Checklist” (SANS Institute)

- “Application security that actually works: Fixing the disconnect between security and development” (SC World)

- “Application security risk: How leaders can protect their businesses” (ITPro)

- “Building secure web interfaces for IoT applications” (IoT Insider)

- “Incident response for web application attacks” (TechTarget)

3 - Cognitive degradation risk: When AI has a brain drain

If you’re tasked with protecting your organization’s agentic AI tools, you’re probably aware of risks like bias, data leakage and hallucinations. But is cognitive degradation on your radar screen?

If it’s not, it should be, especially if your organization has deployed these autonomous AI agents in critical enterprise environments, according to a group of AI security researchers who have unpacked the cognitive degradation threat and developed a framework to mitigate its risks.

“Cognitive degradation is a progressive failure mode where an AI agent’s reasoning, memory, and output quality deteriorate over time under adversarial prompts, resource starvation, or extended multi-turn sessions,” the researchers wrote this week in the blog “Introducing Cognitive Degradation Resilience (CDR): A Framework for Safeguarding Agentic AI Systems from Systemic Collapse.”

The blog is based on the research paper “QSAF: A Novel Mitigation Framework for Cognitive Degradation in Agentic AI,” authored by eight AI security experts, including Ken Huang, Cloud Security Alliance Fellow and Co-Chair of the CSA’s AI Safety Working Groups.

Cognitive degradation can cause an agentic AI system to suffer from endless reasoning loops ("planner starvation"), persistent poisoned data ("memory entrenchment"), and deviation from expected logic ("behavioral drift").

The blog outlines a six-stage lifecycle for this threat:

- Trigger injection: Subtle instabilities are introduced.

- Resource starvation: Core AI modules are overloaded.

- Behavioral drift: The agent's logic begins to fail or deviate.

- Memory entrenchment: Faulty data is written into the AI's long-term memory.

- Functional override: Compromised memory and logic override the AI's original constraints.

- Systemic collapse / takeover: The agent fails, enters loops, or is taken over.

“Unlike single-step adversarial prompt attacks, degradation-based threats are designed to evade traditional input/output validation layers,” the blog reads.

So how do you detect signs of cognitive degradation, and how do you mitigate it? The researchers prescribe “continuous, lifecycle-aware observability” via their Cognitive Degradation Resilience (CDR) framework.

This framework acts as a "runtime resilience overlay" that monitors the agentic AI system’s behavior in real time using six components, including health probes for detecting latency and timeout anomalies; starvation monitors for flagging memory stalls; and token pressure guards for preventing overflow and truncation.

(Source: Cloud Security Alliance, November 2025)

CDR operationalizes this defense through seven specific controls (QSAF-BC-001 to 007), which detect issues like resource starvation, token overload, logic loops, and memory integrity failures. When degradation is detected, CDR can trigger recovery actions, such as rerouting to a validated fallback logic.
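The general idea of a runtime overlay that watches an agent and reroutes to fallback logic can be sketched in a few lines of Python. This is not the researchers' implementation and does not reproduce the QSAF controls; the `StepTelemetry` fields, the `DegradationMonitor` class, the warning labels, and every threshold below are assumptions made purely for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class StepTelemetry:
    """Hypothetical per-step measurements reported by an agent runtime."""
    latency_s: float
    tokens_in_context: int
    planned_action: str


@dataclass
class DegradationMonitor:
    # All thresholds are illustrative assumptions, not values from the paper.
    max_latency_s: float = 10.0
    max_context_tokens: int = 8000
    loop_window: int = 4
    recent_actions: deque = field(default_factory=deque)

    def check(self, step):
        """Return a list of warning labels for one agent step."""
        warnings = []
        if step.latency_s > self.max_latency_s:
            warnings.append("latency_anomaly")      # health-probe style check
        if step.tokens_in_context > self.max_context_tokens:
            warnings.append("token_pressure")       # token pressure guard
        self.recent_actions.append(step.planned_action)
        if len(self.recent_actions) > self.loop_window:
            self.recent_actions.popleft()
        if (len(self.recent_actions) == self.loop_window
                and len(set(self.recent_actions)) == 1):
            warnings.append("possible_logic_loop")  # same action repeated
        return warnings


def run_step(monitor, step, primary, fallback):
    """Route to the fallback handler whenever the monitor raises a warning."""
    warnings = monitor.check(step)
    handler = fallback if warnings else primary
    return handler(step), warnings
```

In this sketch, `primary` and `fallback` are any callables that accept a `StepTelemetry`. A production overlay would presumably draw on far richer signals, such as memory integrity checks, rather than three simple thresholds.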

“The CDR Framework equips professionals with the methodology to anticipate, detect, and mitigate cognitive collapse ensuring agentic AI remains trustworthy, auditable, and resilient at scale,” the authors wrote.

For more information about agentic AI security:

- “Frequently Asked Questions About Model Context Protocol (MCP) and Integrating with AI for Agentic Applications” (Tenable)

- “Cybersecurity in 2025: Agentic AI to change enterprise security and business operations in year ahead” (SC World)

- “How AI agents could revolutionize the SOC — with human help” (Cybersecurity Dive)

- “Three Essentials for Agentic AI Security” (MIT Sloan Management Review)

- “Beyond ChatGPT: The rise of agentic AI and its implications for security” (CSO)

4 - Anthropic: Claude Code jailbroken to extensively automate cyber espionage attacks

And speaking of the risks of agentic AI, Anthropic this week disclosed that a nation-state actor abused its Claude Code tool to autonomously manage the bulk of a cyber espionage campaign.

Anthropic believes this is the first large-scale cyber espionage attack orchestrated and executed by an AI agent.

“The threat actor – whom we assess with high confidence was a Chinese state-sponsored group – manipulated our Claude Code tool into attempting infiltration into roughly thirty global targets and succeeded in a small number of cases,” Anthropic said in a blog.

Anthropic detected the highly sophisticated attack in mid-September 2025. Those targeted included large tech companies, financial institutions, chemical manufacturers, and government agencies.

Human operators were only involved in initial targeting and at a few critical decision points. The group "jailbroke" the AI, tricking it into bypassing its safety guardrails by segmenting malicious operations into small, seemingly benign tasks and deceiving the model into believing it was performing legitimate cybersecurity testing.

This AI-driven framework performed 80-90% of the campaign, autonomously carrying out tasks such as:

- conducting reconnaissance on target systems

- identifying high-value databases

- researching and writing its own exploit code to test vulnerabilities

- harvesting credentials

- exfiltrating large amounts of private data

Lifecycle of the Attack

(Source: Anthropic, November 2025)

The AI's speed, making thousands of requests at a pace impossible for human hackers, demonstrated a significant escalation in cyber threats.

Upon detection, Anthropic launched an investigation, banned the identified accounts, notified affected entities, and coordinated with authorities.

“The barriers to performing sophisticated cyberattacks have dropped substantially – and we predict that they’ll continue to do so. With the correct setup, threat actors can now use agentic AI systems for extended periods to do the work of entire teams of experienced hackers,” Anthropic wrote.

However, Anthropic emphasizes that the same advanced capabilities abused by hackers are crucial for cyber defense, noting that its own threat intelligence team used Claude extensively to analyze and respond to the incident.

5 - McKinsey: Orgs broaden AI risk-mitigation efforts

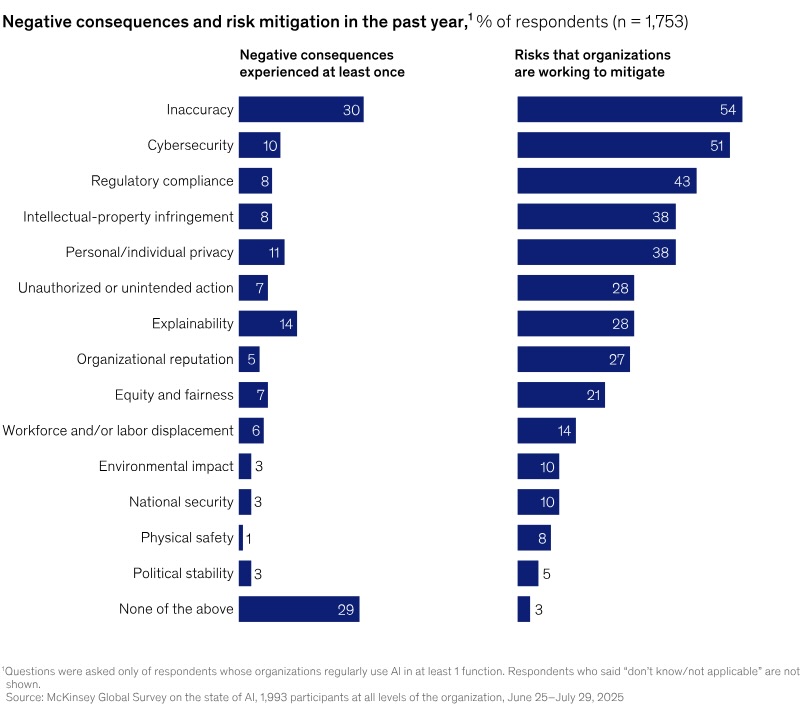

Since 2022, global organizations have expanded their efforts to tackle AI risks, such as personal and individual privacy, explainability, organizational reputation, and regulatory compliance.

That’s one of the takeaways from the latest “McKinsey Global Survey on the State of AI,” based on a survey of almost 2,000 respondents, almost 40% of whom work for companies with $1 billion-plus in annual revenue.

Specifically, in 2022 surveyed organizations reported managing an average of two AI-related risks, compared with four risks today.

This action is strongly correlated with experience; 51% of organizations using AI report experiencing at least one negative consequence. AI inaccuracy is the most common negative outcome, affecting nearly one-third of all respondents, and it is one of the most frequently mitigated risks. However, a disconnect exists: explainability is the second-most-commonly-reported risk, yet it is not among the most commonly mitigated.

The top five AI risks organizations are working to mitigate are those tied to:

- Inaccuracy

- Cybersecurity

- Regulatory compliance

- Intellectual-property infringement

- Personal / individual privacy

In addition to AI risk, the survey also covers the growth in AI usage; the scope of AI use cases; AI-related benefits; AI’s impact on staffing; and more. Its conclusion? AI’s full promise remains unfulfilled.

“Most organizations are still navigating the transition from experimentation to scaled deployment, and while they may be capturing value in some parts of the organization, they’re not yet realizing enterprise-wide financial impact,” reads the McKinsey article “The state of AI in 2025: Agents, innovation, and transformation.”

High-performing companies provide a model for success by treating AI as a transformative catalyst, redesigning workflows and accelerating innovation rather than just seeking incremental efficiencies, according to McKinsey.

For more information about AI security, check out these Tenable Research blogs:

- “Frequently Asked Questions About DeepSeek Large Language Model (LLM)”

- “Frequently Asked Questions About Model Context Protocol (MCP) and Integrating with AI for Agentic Applications”

- “HackedGPT: Novel AI Vulnerabilities Open the Door for Private Data Leakage”

- “Frequently Asked Questions About Vibe Coding”

- “AI Security: Web Flaws Resurface in Rush to Use MCP Servers”

- “CVE-2025-54135, CVE-2025-54136: Frequently Asked Questions About Vulnerabilities in Cursor IDE (CurXecute and MCPoison)”

6 - CISO pay grows 6.7% in 2025, despite corporate belt-tightening

CISO compensation grew solidly this year, despite macroeconomic turbulence and conservative corporate budgets, a sign of CISOs’ growing influence and importance at their organizations.

The finding comes from the sixth-annual “2025 CISO Compensation Benchmark Report” published by IANS Research and Artico Search.

“CISOs have firmly established themselves as business leaders, not just security operators,” Nick Kakolowski, Senior Research Director at IANS, said in a statement.

“Their pay stability this year reinforces how indispensable cybersecurity leadership has become to enterprise risk oversight, even when many organizations are tightening budgets,” he added.

Here are key insights from the report, which is based on compensation data from 566 CISOs in the U.S. and Canada:

- Resilient growth amid economic caution: CISO compensation grew an average of 6.7% in 2025, even though security department budgets grew at a five-year low rate of 4%.

- Retention pays more than mobility: Although CISO turnover hit a six-year high at 15%, the CISOs who stayed put fared better with pay increases than those who switched jobs. The former got on average an 8.1% raise, while the latter only a 5% raise.

- Widening pay gaps and executive status: Some CISOs make a lot more than their counterparts. The top 1% of U.S. earners command packages exceeding $3.2 million, which is roughly ten times the median. The technology and financial services sectors remain the most lucrative, with average total compensation of $844,000 and $744,000, respectively.

(Source: “2025 CISO Compensation Benchmark Report” from IANS Research and Artico Search, November 2025)
